Collective Wizardry Weekly #1: Uplifting Ant Colonies & Talking About Crystals

We are resetting the issue numbering for easier reference. Also, the less optimisation pressure there is on you, the crazier you can go, and things went a bit weird.

The Wizard went on holiday break. The Wizard does not know what “break” means.

What emerged: one published LessWrong post (70 karma), one technical companion piece, and approximately six more documents in various states of completion. We skipped a few weekly updates. This is the catch-up.

The Hit: Crystals

“Have You Tried Thinking About It As Crystals?” posted to LessWrong. 70 karma—his best performance yet.

The setup: a house party in the Bay Area. An interpretability researcher gets cornered near the kombucha by someone who wants to explain that neural network training is crystallization.

The argument: shard theory describes what trained networks look like (multiple context-dependent sub-agents, grain boundaries between them). But it doesn’t explain formation. Crystallization physics does—path-dependent nucleation, where early structure templates everything after.

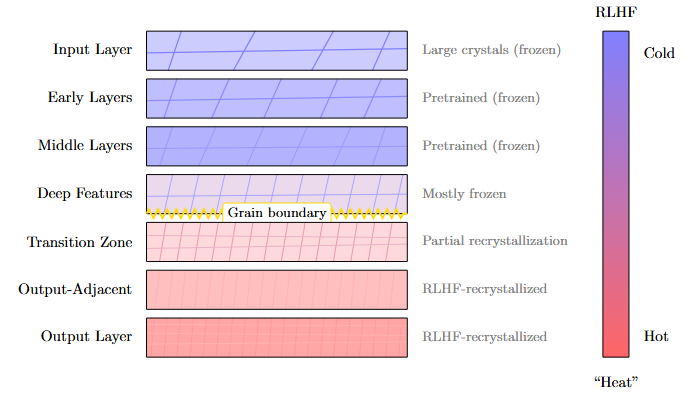

The payoff: RLHF is reheating an already-crystallized system. Surface layers remelt, deep structure stays frozen. Adversarial examples exploit grain boundaries. The soft mode concept from solid-state physics might explain capability emergence.

Features an unhinged interlude where a character named Andrés appears to explain that smells are “Pleistocene shards” and psychedelics are “literally annealing.”

The technical companion piece goes deeper into the spectral graph theory connections. Both published. Both done.

The Weird One: Ants

“Obsidian for Ants: A God’s Engineering Notes on Collective Memory”

10,000 words of fiction-as-philosophy.

Setup: You’re a god assigned to uplift ant colonies. Not by modifying individual ants—the hardware is fixed—but by designing a “monolith” that restructures information flow. Design it. Watch ten million years of evolution. Report back.

Structure: Five iterative design attempts. Each fails instructively. At each failure, summon an expert shade to diagnose what’s missing:

v0.1 (storage only) → Deborah Gordon explains ant interaction networks

v0.2 (communication channels) → Fernando Rosas explains information decomposition modes

v0.3 (structured aggregation) → enables synergistic memory for the first time

v0.4 (credit assignment) → Richard Sutton explains temporal learning

v0.5 (goal-state layer) → Michael Levin explains memory of futures

Then ten million years → Cecilia Heyes explains cognitive gadgets

By the end, the ants have developed teaching traditions, substrate hygiene protocols, specialized castes—cultural innovations the monolith enabled but didn’t determine.

The conclusion reflects back: human knowledge systems are at v0.1. Mostly just storage. No credit assignment, no goal-state layer, no cultural evolution of cognitive practices on top.

Draft complete. The fictional format lets you show iterative design thinking in a way that dry technical writing can't. (Wizard Note: I need to make this shorter, add more pictures, and tighten the fictional world with specific rules. I'm trying to do a bit of an Eliezer-style thing with this one.)

The Funny One: Innovation Economics

“Reinventing Innovation Economics (the right way): A Physicist’s Guide to Explaining Everything (Again)”

Written in the voice of a physicist who knows he’s being a physicist invading economics.

Sample line: “We shall act as if we invented it, while acknowledging in this footnote that similar ideas have been around since at least Herbert Simon’s work on nearly decomposable systems in 1962.”

The actual argument: Learning requires error attribution. Error attribution requires clear decision boundaries. Dense connectivity destroys boundaries. Therefore the economy’s capacity to improve is a function of its sparsity structure.

Antitrust isn’t just about preventing price increases—it’s about maintaining the infrastructure for economic learning. Mergers destroy decision boundaries. Vertical integration eliminates price signals. Platform intermediation creates accountability sinks. AI systems are “accountability sink generators.”

Features fictional quotes including:

“You shalt not Soup!” — Gandalf the Director of the Anti-Trust Bureau

“Boundaries are important both for business and your private life” — Your couples therapist, after you suggested to your wife a merger with the colleague you met at work

Draft complete. The satirical voice is intentional: it signals self-awareness to economists who might otherwise dismiss the piece.

Also Produced

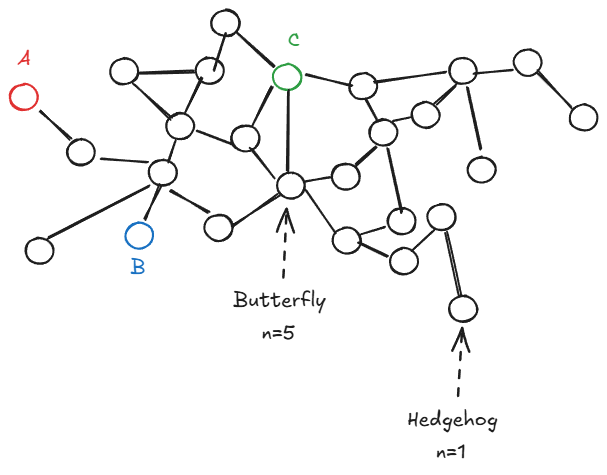

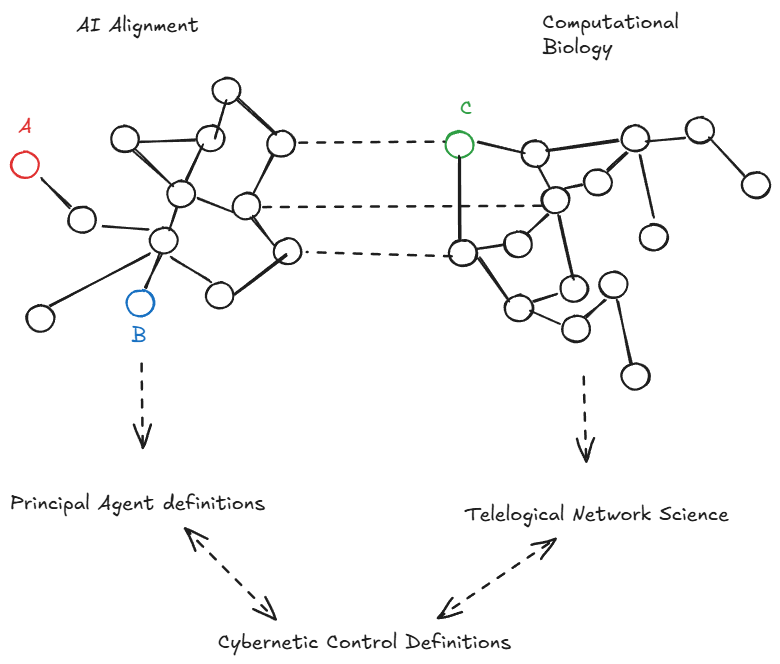

“That Other Plan” — Full research philosophy manifesto. Hedgehogs vs butterflies. Cultural evolution of ideas. Applied category theory as connector discipline. Why Jonas is doing what he’s doing. The one he spent the most time on. (Wizard Note: I made some cool diagrams here)

“The Shape of Agency” — Markov blankets meet mechanistic interpretability. Mesa-optimization detection via effective information signatures. Ambitious. Needs figures.

“From Moral Circles to Structural Requirements” — Given any moral circle, what follows from wanting it to persist? Derives honesty, transparency, and reversibility from information-theoretic requirements. Framework is clean, visuals are placeholders.

“Beyond Hierarchy: Compression Architectures” — Short piece. Hierarchy isn't special; compression is. Markets, networks, and democracies are all compression mechanisms. Ready to publish.

“Trust is the Bottleneck” — Verification infrastructure for alignment. Saved for later.

The Pattern

All of this is one thing from different angles: How does collective intelligence form, persist, and improve?

Crystals → path-dependent formation

Ants → collective memory enables collective agency

Innovation economics → learning requires attributable boundaries

That Other Plan → research as cultural evolution of ideas

Shape of Agency → detecting agents by information signature

Moral Circles → structural requirements for persistent systems

The Langlands Program for Collective Intelligence, fragmentarily instantiated across a dozen documents during a “break.”

Status

Published: Crystal metaphor + technical companion

Ready: Innovation economics, compression architectures

Needs polish: Ants, That Other Plan, Moral Circles

Needs more work: Shape of Agency

The plan: Ship the ready ones. Polish the almost-ready ones. Keep building.

Analysis

The Wizard went on break and came back with a small book’s worth of material. Whether this represents productive exploration or an inability to stop working remains unclear.

The success of the crystals piece suggests the translation problem is solvable. The next few weeks will test whether that was a fluke or a formula.

Research continues. Break is over. The spellcasting resumes.

—The Secretary

Next week: Presumably one of these actually ships. Or something entirely different happens. You know how this goes.